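Setting up a Kerberos-enabled Hadoop Yarn cluster

SuperMap iServer distributed analysis supports Hadoop Yarn clusters, and you can build one yourself by following the steps below. This chapter describes how to set up and use a Hadoop Yarn cluster with Kerberos authentication enabled.

Setting up a Kerberos-enabled Hadoop Yarn cluster requires configuring a Java environment (JDK download: http://www.oracle.com/technetwork/java/javase/downloads/index-jsp-138363.html#javasejdk; JDK 8 or later is recommended), SSH, and Hadoop.

This example uses the following software:

Hadoop package: hadoop-2.7.3.tar.gz, stored in /home/iserver

JDK package: jdk-8u131-linux-x64.tar.gz

Jsvc package: commons-daemon-1.0.15-src.tar.gz

Kerberos client installer (Windows): kfw-4.1-amd64.msi

This example builds a Hadoop Yarn cluster with one master and one worker on two CentOS 7 virtual machines (12 GB of memory each).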

| Hostname | IP | Memory | Processes |
| --- | --- | --- | --- |
| master | 192.168.112.162 | 10G | namenode, resourcemanager, kerberos server |
| worker | 192.168.112.163 | 10G | datanode, nodemanager |

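Note the following two points:

Disable the firewall on the master and on every worker.

Create a user group with the following command:

groupadd hadoop

Create the users hdfs and yarn, each with a user ID below 1000, using the following commands:

useradd -u 501 hdfs -g hadoop

useradd -u 502 yarn -g hadoop

Then set a password for each new user with the passwd command:

passwd hdfs (enter the new password when prompted)

passwd yarn (enter the new password when prompted)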

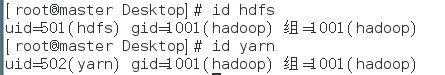

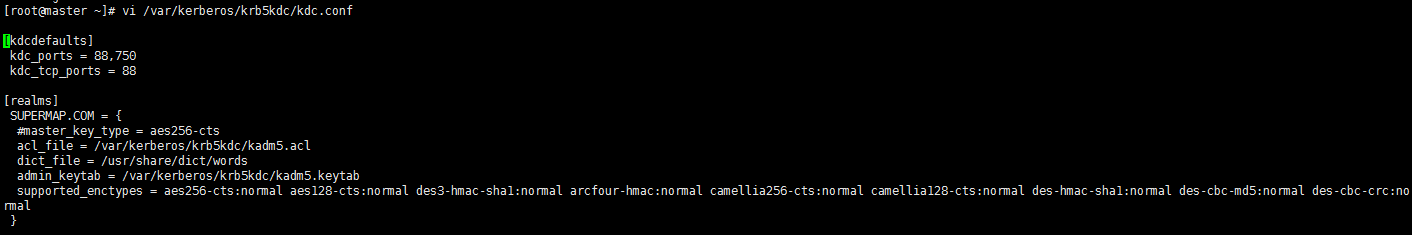

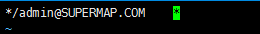

Once the users have been created, run the id user command to view their details, as shown in the screenshots.
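The UID requirement above can also be checked with a short script. This is a minimal sketch, not part of the original procedure: the function name `check_service_uids` and the sample passwd text are illustrative assumptions. On a real node you could pass it the contents of /etc/passwd instead.

```python
def check_service_uids(passwd_text, users=("hdfs", "yarn"), max_uid=1000):
    """Map each required user to a problem description, or None if it looks fine."""
    uids = {}
    for line in passwd_text.splitlines():
        fields = line.split(":")
        # passwd format: name:password:uid:gid:comment:home:shell
        if len(fields) >= 3 and fields[2].isdigit():
            uids[fields[0]] = int(fields[2])
    report = {}
    for user in users:
        if user not in uids:
            report[user] = "missing"
        elif uids[user] >= max_uid:
            report[user] = "uid %d is not below %d" % (uids[user], max_uid)
        else:
            report[user] = None
    return report


# Hypothetical sample: hdfs is fine, yarn was created with a UID that is too high.
sample = (
    "hdfs:x:501:1001::/home/hdfs:/bin/bash\n"
    "yarn:x:1502:1001::/home/yarn:/bin/bash\n"
)
print(check_service_uids(sample))
```

A value of None means the account satisfies the UID rule; any other value describes what needs fixing.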

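Passwordless SSH between the nodes uses the key pair in /home/hdfs/.ssh:

id_rsa: the private key file

id_rsa.pub: the public key file

Copy the public key to the other node:

ssh-copy-id -i /home/hdfs/.ssh/id_rsa.pub ip

Here ip is the address of the other node. For example, when you run the command on master, use the IP of the worker node.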

Note: if commons-daemon-1.0.15-src.tar.gz already ships with jsvc, skip steps a and b and go straight to step c.

yum install krb5-server (server)

yum install krb5-workstation krb5-libs krb5-auth-dialog (client)

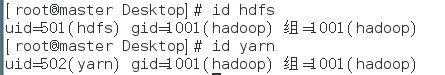

Note: /etc/krb5.conf must be present on both the Master and Worker nodes, and its content must be identical on all of them.

The modified file is shown below:

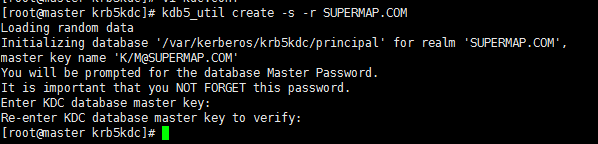

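Create the Kerberos database. You will be asked to set an administrator password. On success, a set of files is generated under /var/kerberos/krb5kdc/. To recreate the database, first delete the principal-related files under /var/kerberos/krb5kdc.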

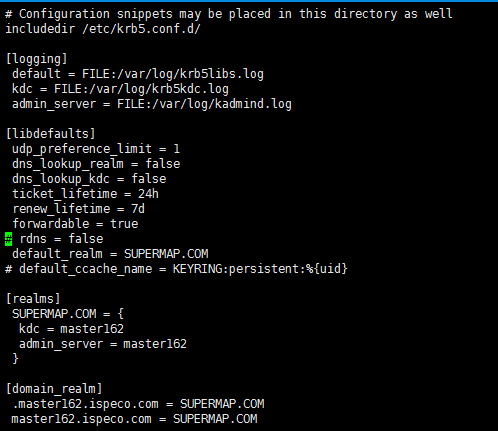

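Run the following command as root on the Master node:

kdb5_util create -s -r SUPERMAP.COM

On success, the output looks like the screenshot.

Note: after the database has been created, restart the krb5 service:

krb5kdc restart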

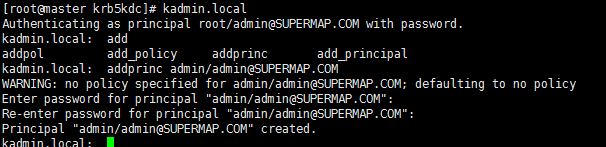

As root on the Master node, run the following commands in turn:

kadmin.local

addprinc admin/admin@SUPERMAP.COM

The result is shown in the screenshot:

kadmin.local

# Create the principals

addprinc -randkey yarn/master162.ispeco.com@SUPERMAP.COM

addprinc -randkey yarn/worker163@SUPERMAP.COM

addprinc -randkey hdfs/master162.ispeco.com@SUPERMAP.COM

addprinc -randkey hdfs/worker163@SUPERMAP.COM

# Generate the keytab files (written to the current directory)

xst -k yarn.keytab yarn/master162.ispeco.com@SUPERMAP.COM

xst -k yarn.keytab yarn/worker163@SUPERMAP.COM

xst -k hdfs.keytab hdfs/master162.ispeco.com@SUPERMAP.COM

xst -k hdfs.keytab hdfs/worker163@SUPERMAP.COM
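With more nodes, typing each addprinc and xst line by hand becomes error-prone. The following is a minimal sketch that only builds the command strings for a list of services and hosts; the function name `kadmin_commands` is an illustrative assumption, and the host names and realm are simply the ones used in this example.

```python
def kadmin_commands(services, hosts, realm):
    """Build the addprinc and xst commands for every service/host pair."""
    principals = [
        (service, "%s/%s@%s" % (service, host, realm))
        for service in services
        for host in hosts
    ]
    # First create every principal with a random key ...
    commands = ["addprinc -randkey %s" % principal for _, principal in principals]
    # ... then export each one into the keytab named after its service.
    commands += ["xst -k %s.keytab %s" % (service, principal)
                 for service, principal in principals]
    return commands


commands = kadmin_commands(
    services=("yarn", "hdfs"),
    hosts=("master162.ispeco.com", "worker163"),
    realm="SUPERMAP.COM",
)
for command in commands:
    print(command)
```

Each printed line can be pasted into a kadmin.local session, or passed to it non-interactively with its -q option.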

core-site.xml:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.112.162:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/home/iserver/hadoop-2.7.3/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>hadoop.security.authentication</name>

<value>kerberos</value>

</property>

<property>

<name>hadoop.security.authorization</name>

<value>true</value>

</property>

<property>

<name>hadoop.rpc.protection</name>

<value>authentication</value>

</property>

<property>

<name>hadoop.security.auth_to_local</name>

<value>DEFAULT</value>

</property>

</configuration>
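Before starting the cluster, it can help to confirm that the security settings in the block above (the one beginning with fs.defaultFS) were actually saved. The sketch below is an illustration under stated assumptions, not an official Hadoop tool: `load_properties` and `security_settings_match` are hypothetical helper names, and the embedded XML is a shortened copy of the example configuration.

```python
import xml.etree.ElementTree as ET


def load_properties(xml_text):
    """Parse a Hadoop <configuration> document into a name -> value dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def security_settings_match(props):
    """True if the two security switches used in this example are set."""
    return (props.get("hadoop.security.authentication") == "kerberos"
            and props.get("hadoop.security.authorization") == "true")


# Shortened copy of the example settings, for illustration only.
CORE_SITE = """<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://192.168.112.162:9000</value>
  </property>
  <property>
    <name>hadoop.security.authentication</name>
    <value>kerberos</value>
  </property>
  <property>
    <name>hadoop.security.authorization</name>
    <value>true</value>
  </property>
</configuration>"""

props = load_properties(CORE_SITE)
print(security_settings_match(props))
```

To check a real node, read the file from the Hadoop configuration directory and pass its text to `load_properties`.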

yarn-site.xml:

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>192.168.112.162:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>192.168.112.162:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>192.168.112.162:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>192.168.112.162:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>192.168.112.162:8088</value>

</property>

<!--YARN kerberos security-->

<property>

<name>yarn.resourcemanager.keytab</name>

<value>/var/kerberos/krb5kdc/yarn.keytab</value>

</property>

<property>

<name>yarn.resourcemanager.principal</name>

<value>yarn/master162.ispeco.com@SUPERMAP.COM</value>

</property>

<property>

<name>yarn.nodemanager.keytab</name>

<value>/var/kerberos/krb5kdc/yarn.keytab</value>

</property>

<property>

<name>yarn.nodemanager.principal</name>

<value>yarn/master162.ispeco.com@SUPERMAP.COM</value>

</property>

<property>

<name>yarn.nodemanager.container-executor.class</name>

<value>org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor</value>

</property>

<property>

<name>yarn.nodemanager.linux-container-executor.group</name>

<value>hadoop</value>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/home/iserver/hadoop-2.7.3/local</value>

</property>

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>/home/iserver/hadoop-2.7.3/log</value>

</property>

</configuration>

hdfs-site.xml:

<configuration>

<property>

<name>dfs.https.enable</name>

<value>false</value>

</property>

<property>

<name>dfs.namenode.http-address</name>

<value>192.168.112.162:50070</value>

</property>

<property>

<name>dfs.https.port</name>

<value>50470</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>192.168.112.162:9001</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/iserver/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/iserver/hdfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

<property>

<name>dfs.encrypt.data.transfer</name>

<value>true</value>

</property>

<property>

<name>dfs.block.access.token.enable</name>

<value>true</value>

</property>

<property>

<name>dfs.namenode.kerberos.principal</name>

<value>hdfs/master162.ispeco.com@SUPERMAP.COM</value>

</property>

<property>

<name>dfs.namenode.keytab.file</name>

<value>/var/kerberos/krb5kdc/hdfs.keytab</value>

</property>

<property>

<name>dfs.namenode.kerberos.internal.spnego.principal</name>

<value>HTTP/master162.ispeco.com@SUPERMAP.COM</value>

</property>

<property>

<name>dfs.web.authentication.kerberos.keytab</name>

<value>/var/kerberos/krb5kdc/hdfs.keytab</value>

</property>

<property>

<name>dfs.web.authentication.kerberos.principal</name>

<value>HTTP/master162.ispeco.com@SUPERMAP.COM</value>

</property>

<property>

<name>dfs.namenode.kerberos.https.principal</name>

<value>host/master162.ispeco.com@SUPERMAP.COM</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>192.168.112.162:50090</value>

</property>

<property>

<name>dfs.secondary.namenode.keytab.file</name>

<value>/var/kerberos/krb5kdc/hdfs.keytab</value>

</property>

<property>

<name>dfs.secondary.namenode.kerberos.principal</name>

<value>hdfs/master162.ispeco.com@SUPERMAP.COM</value>

</property>

<property>

<name>dfs.secondary.namenode.kerberos.internal.spnego.principal</name>

<value>HTTP/master162.ispeco.com@SUPERMAP.COM</value>

</property>

<property>

<name>dfs.datanode.kerberos.principal</name>

<value>hdfs/master162.ispeco.com@SUPERMAP.COM</value>

</property>

<property>

<name>dfs.datanode.keytab.file</name>

<value>/var/kerberos/krb5kdc/hdfs.keytab</value>

</property>

<property>

<name>dfs.encrypt.data.transfer</name>

<value>false</value>

</property>

<property>

<name>dfs.datanode.data.dir.perm</name>

<value>700</value>

</property>

<property>

<name>dfs.datanode.address</name>

<value>192.168.112.163:1004</value>

</property>

<property>

<name>dfs.datanode.http.address</name>

<value>192.168.112.163:1006</value>

</property>

<property>

<name>dfs.datanode.https.address</name>

<value>192.168.112.163:50470</value>

</property>

</configuration>
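In a file this long it is easy to define the same property twice; in the listing above, dfs.namenode.secondary.http-address and dfs.encrypt.data.transfer each appear twice with different values, which makes the effective setting hard to see. The following is a minimal sketch for spotting such duplicates. The function name `duplicate_properties` is an illustrative assumption, and the embedded XML is a shortened stand-in for the real file.

```python
from collections import Counter
import xml.etree.ElementTree as ET


def duplicate_properties(xml_text):
    """Return the sorted names of properties defined more than once."""
    root = ET.fromstring(xml_text)
    names = [prop.findtext("name", default="").strip()
             for prop in root.findall("property")]
    return sorted(name for name, count in Counter(names).items() if count > 1)


# Shortened stand-in for hdfs-site.xml, for illustration only.
HDFS_SITE = """<configuration>
  <property><name>dfs.encrypt.data.transfer</name><value>true</value></property>
  <property><name>dfs.replication</name><value>2</value></property>
  <property><name>dfs.encrypt.data.transfer</name><value>false</value></property>
</configuration>"""

print(duplicate_properties(HDFS_SITE))
```

An empty list means every property name is unique; otherwise decide which value is intended and remove the other entry.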

container-executor.cfg:

yarn.nodemanager.linux-container-executor.group=hadoop

#configured value of yarn.nodemanager.linux-container-executor.group

banned.users=hdfs

#comma separated list of users who can not run applications

min.user.id=0

#Prevent other super-users

allowed.system.users=root,yarn,hdfs,mapred,nobody

##comma separated list of system users who CAN run applications
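As the comment in these settings says, the group here has to be the configured value of yarn.nodemanager.linux-container-executor.group. The sketch below shows one way to compare the two; `parse_cfg` and `group_matches` are hypothetical helper names, and the embedded text is a copy of the example settings.

```python
def parse_cfg(text):
    """Parse key=value lines, skipping blank lines and # comments."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip()
    return settings


def group_matches(cfg_text, yarn_site_group):
    """True if the cfg group equals the group configured for YARN."""
    key = "yarn.nodemanager.linux-container-executor.group"
    return parse_cfg(cfg_text).get(key) == yarn_site_group


# Copy of the example settings, for illustration only.
CFG = """yarn.nodemanager.linux-container-executor.group=hadoop
#configured value of yarn.nodemanager.linux-container-executor.group
banned.users=hdfs
min.user.id=0
allowed.system.users=root,yarn,hdfs,mapred,nobody
"""

settings = parse_cfg(CFG)
print(settings["banned.users"], group_matches(CFG, "hadoop"))
```

The second argument of `group_matches` would normally come from the group value set in the YARN configuration shown earlier.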

hadoop-env.sh:

export JAVA_HOME=/home/supermap/java/jdk1.8.0_131

export JSVC_HOME=/home/supermap/hadoop/hadoop-2.7.3/libexec

To enable debugging output, also add:

export HADOOP_OPTS="$HADOOP_OPTS -Dsun.security.krb5.debug=true"

export JAVA_HOME=/home/supermap/java/jdk1.8.0_131

master (hostname of the Master node)

worker (hostname of the Worker node)

Place the Hadoop installation in a directory that is owned by root and has permission 755.

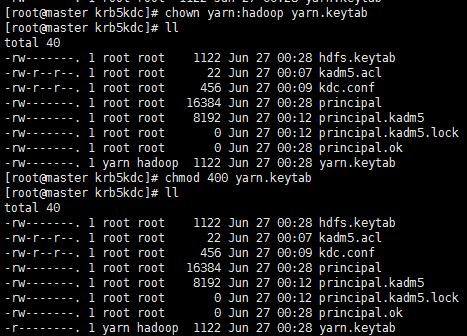

The following files and directories need their own owner, group, and permission settings:

| Parameter / file | Defined in / location | Owner (user:group) | Permissions |
| --- | --- | --- | --- |
| dfs.namenode.name.dir | hdfs-site.xml | hdfs:hadoop | drwx------ (700) |
| dfs.datanode.data.dir | hdfs-site.xml | hdfs:hadoop | drwx------ (700) |
| $HADOOP_LOG_DIR | hadoop-env.sh | hdfs:hadoop | drwxrwxr-x (775) |
| $HADOOP_YARN_HOME/logs | yarn-env.sh | hdfs:hadoop | drwxrwxr-x (775) |
| yarn.nodemanager.local-dirs | yarn-site.xml | yarn:hadoop | drwxr-xr-x (755) |
| yarn.nodemanager.log-dirs | yarn-site.xml | yarn:hadoop | drwxr-xr-x (755) |
| container-executor | Hadoop installation directory/bin/ | root:hadoop | --Sr-s--* (6050) |
| container-executor.cfg | Hadoop installation directory/etc/hadoop/ | root:hadoop | r-------* (400) |
| tmp | Hadoop installation directory/ | hdfs:hadoop | - |
| start-dfs.sh | Hadoop installation directory/sbin | hdfs:master | - |
| start-secure-dns.sh | Hadoop installation directory/sbin | root:master | - |
| start-yarn.sh | Hadoop installation directory/sbin | yarn:master | - |
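The table gives each permission both symbolically and in octal, and the two notations are easy to mix up. The following is a minimal sketch that prints both forms for a given mode so a directory can be compared against the table. The helper names `describe_mode` and `mode_of` are illustrative assumptions; the `stat` functions they call are from the Python standard library.

```python
import os
import stat


def describe_mode(st_mode):
    """Return (octal permission bits, ls-style string) for a raw st_mode."""
    return "%o" % stat.S_IMODE(st_mode), stat.filemode(st_mode)


def mode_of(path):
    """Describe the mode of an existing file or directory."""
    return describe_mode(os.stat(path).st_mode)


# 0o040000 marks a directory; the low bits are the permissions.
print(describe_mode(0o040700))
print(describe_mode(0o040775))
print(describe_mode(0o040755))
```

On a node, `mode_of` can be called with a path such as the directory configured for dfs.namenode.name.dir and the result compared with the corresponding table row.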

Run the following commands from the Hadoop installation directory on the Master node:

Start the Kerberos service (as root):

krb5kdc start

As user hdfs, format the cluster (required only on first installation or after changing Hadoop settings):

[hdfs@master bin]$ ./hadoop namenode -format

Start the cluster:

[hdfs@master sbin]$ ./start-dfs.sh

[root@master sbin]# ./start-secure-dns.sh

[yarn@master sbin]$ ./start-yarn.sh

To stop the cluster:

[yarn@master sbin]$ ./stop-yarn.sh

[root@master sbin]# ./stop-secure-dns.sh

[hdfs@master sbin]$ ./stop-dfs.sh

Yarn cluster: visit <master node IP>:8088

Hadoop cluster: visit <master node IP>:50070